1X World Model | From Video to Action: A New Way Robots Learn

The 1X World Model now serves as NEO’s cognitive core—enabling generalization to unseen tasks. Ask NEO anything and watch it attempt the task entirely autonomously.

At 1X, we build robots that help humans in the most diverse environment imaginable: people’s homes. To deploy our Redwood AI model safely and reliably, we need to anticipate its policy behavior across all that it can encounter. From retrieving a rarely-used kitchen gadget tucked away in a cluttered drawer, to navigating a living room unexpectedly rearranged overnight, physically evaluating each policy we've trained across these varied scenarios would take several lifetimes. How can we accelerate the evaluation of generalist robot models?

Today, we share progress on the 1X World Model (1XWM): a bridge between the world of atoms and the world of bits.

The 1X World Model enables NEO to anticipate the outcomes of robot actions and their consequences on the world.

Starting from the same initial state, 1XWM predicts four distinct futures for NEO when conditioned on four different low-level action sequences: grabbing a mug, wiping the countertop, stepping backwards, and playing an imaginary guitar.

This approach significantly accelerates experimentation, allowing us to evaluate the reliability and effectiveness of robotic policies in a fraction of the time, without requiring intensive, environment-specific engineering within traditional physics-based simulators or mock real-world sets.

In this post, we show:

The 1X World Model allows us to:

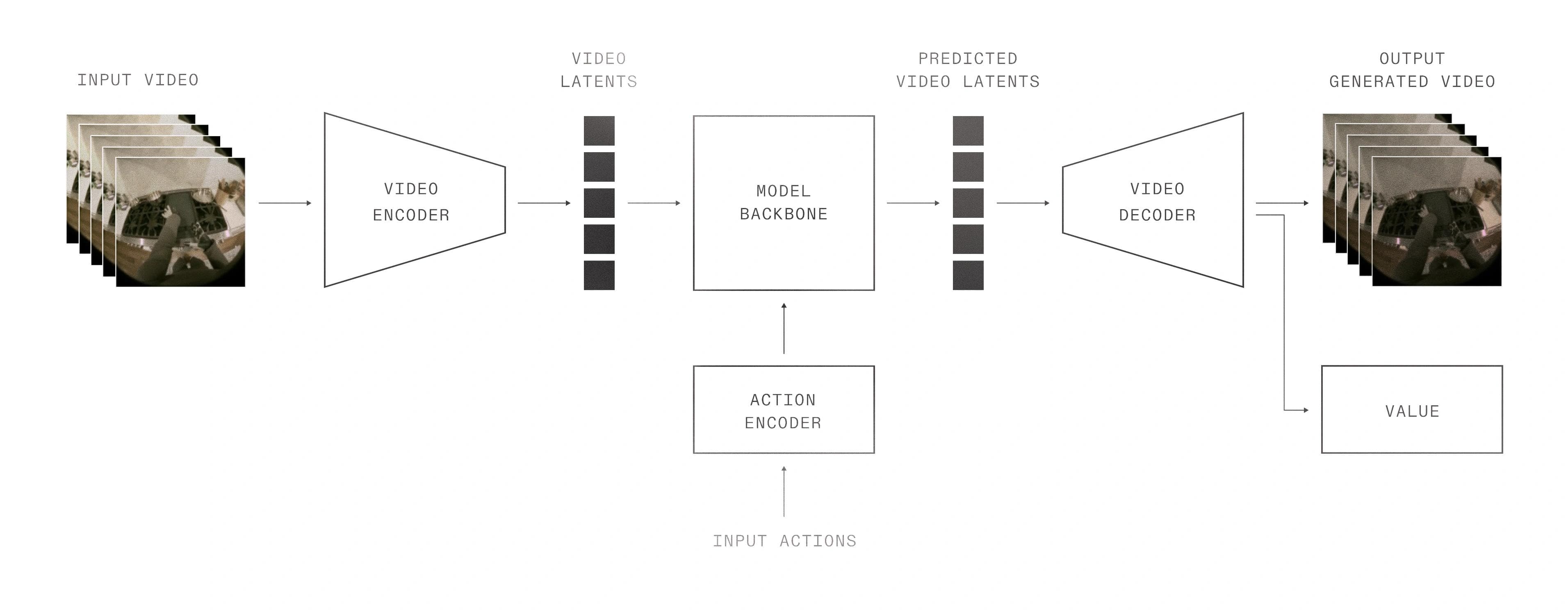

We train our world model on sequences of video frames, robot observations, and input action trajectories. We encode the inputs to latent representations, and predict the latent encodings of future frames. We also predict the state value of the final frame to evaluate task success and completion.

Most video generation models are text-to-video (T2V), using a language prompt to generate the video, and optionally, one or more reference frames for guidance. However, world models for simulating robots need to be action-controllable, steered by exact robot trajectories rather than loose directives like “Grab the mug” or “Wipe the countertop.” We demonstrate the action-controllability of 1XWM by providing it with a few initial frames of real footage along with multiple subsequent action trajectories. From this anchor point, the 1XWM simulates the consequences of taking those exact actions, including the physics of objects like doors being opened and cloths being wiped across a countertop.

[Videos: three 1XWM generations shown alongside the corresponding real footage.]
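The idea of action-controllability can be sketched in a few lines. This is a toy illustration, not the 1XWM architecture: `rollout` and `toy_step` are hypothetical names, the latents are short lists of floats, and the "dynamics" simply add the action to the latent, where a real world model would use a learned network. It shows only the control flow: one shared starting state, several action sequences, one predicted future per sequence.

```python
from typing import Callable, Dict, List, Sequence

Latent = List[float]
Action = List[float]


def rollout(
    initial_latent: Latent,
    actions: Sequence[Action],
    step: Callable[[Latent, Action], Latent],
) -> List[Latent]:
    """Roll a latent dynamics function forward under a fixed action sequence."""
    latents = [initial_latent]
    for action in actions:
        # Each predicted latent depends on the previous latent and the action.
        latents.append(step(latents[-1], action))
    return latents


def toy_step(latent: Latent, action: Action) -> Latent:
    """Hypothetical stand-in for a learned dynamics network."""
    return [z + a for z, a in zip(latent, action)]


# Same anchor state, two different action trajectories (illustrative values).
start: Latent = [0.0, 0.0]
futures: Dict[str, List[Latent]] = {
    "forward": rollout(start, [[1.0, 0.0]] * 3, toy_step),
    "sideways": rollout(start, [[0.0, 1.0]] * 3, toy_step),
}
```

Because the two trajectories share `start` and differ only in their actions, any difference between the futures comes from the actions alone. That is the property the comparisons above are meant to demonstrate.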

Does 1XWM improve as data is scaled up? What kinds of data best improve its understanding of physics?

To study this, we train 1XWM to not only predict future states and images, but also whether the task attempt succeeded or failed at the end of each generation. We see improvements in accuracy across the board as we scale up the number of tasks and the diversity of robot behaviors. We explore this in the following tasks: Airfryer, Arcade, and Shelf.

We observe a clear improvement in generation quality as we train on task-specific data. When confronted with an unfamiliar task and environment, the WM often struggles to model the object interactions exactly, without having knowledge of their specific properties. Training on task-specific data allows the WM to update based on the subtle dynamics of the task at hand.

For example, when trained on smaller amounts of data, 1XWM hallucinates the air fryer tray and body as a single unit, pulling the entire unit off the counter. After adding interaction data with the air fryer, 1XWM gains a better understanding of how the tray separates from the air fryer, and even learns to model subtle interactions such as the confinement of the tray movement within the base of the air fryer.

We also observe that training on both shelf and arcade data improves accuracy compared to training on shelf data alone. This positive transfer of accuracy and task understanding from one task to another reinforces our belief that 1XWM will keep improving as it scales.

The more task-oriented robotics data we accumulate, the more accurately we can predict task-level future outcomes. We then turn this capability into an evaluation engine as a novel application of world modeling for robotics development.

An aligned world model can solve the evaluation problem by forecasting the actions of candidate robot policies. Given 1X World Model generations from each policy on datasets of initial states, we can compare their respective performances. Importantly, we can curate datasets of production-setting states and generate counterfactual results from states that an autonomous policy has previously failed in.

For every set of model checkpoint weights, we predict future states and success likelihood that we show are distributionally aligned to actual real-world futures. This gives us insight into model performance at scale and allows us to make model architecture and checkpoint selection decisions with an instant feedback loop.
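A minimal sketch of this checkpoint comparison follows, assuming the world model is wrapped in a function that returns a success probability for a policy started from a given state. The names `predicted_success_rate`, `rank_checkpoints`, and `toy_predict` are hypothetical, and the toy scorer is a made-up formula used only to make the example runnable. The point is that every checkpoint is scored on the same set of initial states.

```python
from statistics import mean
from typing import Any, Callable, Dict, List, Sequence, Tuple


def predicted_success_rate(
    policy: Any,
    initial_states: Sequence[Any],
    predict_success: Callable[[Any, Any], float],
) -> float:
    """Average predicted success probability over a fixed set of initial states."""
    return mean([predict_success(policy, state) for state in initial_states])


def rank_checkpoints(
    checkpoints: Dict[str, Any],
    initial_states: Sequence[Any],
    predict_success: Callable[[Any, Any], float],
) -> List[Tuple[str, float]]:
    """Score every checkpoint on identical states, best predicted rate first."""
    scores = {
        name: predicted_success_rate(policy, initial_states, predict_success)
        for name, policy in checkpoints.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def toy_predict(skill: float, difficulty: float) -> float:
    """Hypothetical stand-in for a world-model rollout plus success prediction."""
    return min(1.0, max(0.0, 0.5 + skill - difficulty))


# Illustrative values only: a "policy" is a skill level, a "state" a difficulty.
states = [0.2, 0.5, 0.8]
checkpoints = {"ckpt_a": 0.4, "ckpt_b": 0.7}
ranked = rank_checkpoints(checkpoints, states, toy_predict)
```

Scoring all checkpoints on one shared dataset of states removes variation in starting conditions from the comparison, which is hard to guarantee in physical evaluation.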

In the plot below, we ablate the decision to include proprioception (robot joint states) as input to our robot policy, and plot the 1X World Model predicted success rate for each checkpoint. We then run real-world evaluation on the most and least promising checkpoints according to 1XWM. We find that the predicted success rates do correlate with the true task scores, which lets us forecast that proprioception likely improves policy performance.

Given a true real-world success rate gap of 15% between two policies, a World Model with 70% accuracy can correctly identify the better policy 90% of the time. Given that we see a consistent predicted performance gap across checkpoints, and that we can evaluate policies on identical starting states, we can be even more confident in such a verdict.
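The post does not state the assumptions behind these figures (the noise model, the number of evaluation episodes, or the baseline success rates), so the following Monte Carlo sketch is not a reproduction of the 90% number. It assumes the world model flips each true success/failure label independently with probability `1 - accuracy`, and that each policy is scored on `n_episodes` independent rollouts. The function names and the 65% vs. 50% true success rates are hypothetical.

```python
import random


def observed_rate(true_rate: float, accuracy: float) -> float:
    """Predicted success rate when each outcome label is flipped
    with probability 1 - accuracy (assumed symmetric noise)."""
    return true_rate * accuracy + (1.0 - true_rate) * (1.0 - accuracy)


def prob_pick_better(
    p_better: float,
    p_worse: float,
    accuracy: float,
    n_episodes: int,
    n_trials: int = 2000,
    seed: int = 0,
) -> float:
    """Estimate how often the noisy evaluator ranks the truly better policy first."""
    rng = random.Random(seed)
    q_better = observed_rate(p_better, accuracy)
    q_worse = observed_rate(p_worse, accuracy)
    wins = 0.0
    for _ in range(n_trials):
        s_better = sum(rng.random() < q_better for _ in range(n_episodes))
        s_worse = sum(rng.random() < q_worse for _ in range(n_episodes))
        if s_better > s_worse:
            wins += 1.0
        elif s_better == s_worse:
            wins += 0.5  # a tie is treated as a coin flip
    return wins / n_trials


# Hypothetical setting: a 15-point true gap, scored by a 70%-accurate evaluator.
estimate = prob_pick_better(0.65, 0.50, accuracy=0.7, n_episodes=100)
```

Under this noise model, the gap the evaluator sees is the true gap scaled by `2 * accuracy - 1`, so a 15-point gap shrinks to 6 points at 70% accuracy. The ordering of the two policies is preserved in expectation, and averaging over more initial states makes the correct verdict more likely.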

As another experiment, we compare two different image encoders for a policy. We take the most promising predicted checkpoint from each policy, and see that the ViT-L model, which 1XWM predicts to be better, does indeed perform better in the real world. For more experiments, see our technical report.

As we deploy robots in homes, we will need to move away from task-specific evaluation and towards production-level evaluation, capable of handling a wider, more ambiguous array of full-body manipulation tasks and objects. Improving the generalization capability and accuracy of 1XWM will be the first step towards this goal.

[Video: real footage compared with the 1XWM generation.]

The 1XWM currently struggles to model interactions with held-out objects (such as this plant) not seen in training data.

We've shown promising results in scaling up the 1X World Model (1XWM) to predict the future. As we increase the amount of training compute and real-world NEO data, 1XWM's accuracy in predicting whether NEO accomplishes a task also increases.

The implications of accurate hallucinated rollouts extend far beyond rigorous evaluation for humanoid robots. Consider what happens when the data generated by 1XWM (the joint distribution over all the sensor readings and actions observed by the robot) becomes indistinguishable from real data. This moment has already happened for LLMs, and we think that it will also soon be true for synthetic robotics data. Data and evals are the cornerstone of solving autonomy, and 1XWM provides a unified path for tackling both challenges.
